Rui Wang

AlumniTechnical University of MunichSchool of Computation, Information and Technology

Informatics 9

Boltzmannstrasse 3

85748 Garching

Germany

Fax: +49-89-289-17757

Office:

Mail: rui.wang@in.tum.de

This is my old webpage which is not maintained since March 2021.

Please visit my new website here.

Brief Bio

I received my Bachelor's degree (2011) in Automation from Xi'an Jiaotong University, and my Master's degree (2014) in Electrical Engineering and Information Technology from the Technical University of Munich. From 2014 to 2016 I worked as a computer vision algorithm developer for advanced driver assistance systems (ADAS) at Continental. Since March 2016 I am a PhD student in the Computer Vision Group at the Technical University of Munich, headed by Professor Daniel Cremers. In 2018 I joined Artisense, a startup co-founded by Professor Cremers, as a PhD student and senior computer vision & AI researcher. My research interests include visual SLAM and visual 3D reconstruction, as well as their combinations with semantic information.

Find me on Google Scholar, LinkedIn, Strava (highly research related).

Research Interests

VO and vSLAM

- Stereo DSO This video shows some results of our paper "Stereo DSO: Large-Scale Direct Sparse Visual Odometry with Stereo Cameras" accepted by ICCV 2017. (Project Page)

- SLAM extension to Stereo DSO After the ICCV 2017 deadline, we extended our method to a SLAM system with additional components for map maintenance, loop detection and loop closure. Our performance on KITTI is further boosted a little, as shown by the plots in the video. (Project Page)

- LDSO: Direct Sparse Odometry with Loop Closure In this project we integrate feature points into DSO to improve the repeatability of the sampled points, enabling loop closure candidate detection using BoW and loop closure based on pose graph optimization. For more details and access to the code, please visit the Project Page.

Large-scale Relocalization

- DH3D "DH3D: Deep Hierarchical 3D Descriptors for Robust Large-Scale 6DoF Relocalization" (ECCV 2020, spotlight). Please visit the Project Page for code and related materials. (The video is with audio.)

Bring Semantic Information into vSLAM

- DirectShape This video shows the basic idea and some results of our paper "DirectShape: Photometric Alignment of Shape Priors for Visual Vehicle Pose and Shape Estimation". In this work, we estimate the 3D poses and shapes of cars based on a single stereo image pair. Note that the point clouds in the video are only for visualization purpose, they are not used in our method. For more details please refer to the Project Page.

Robustify VO with Deep Learning

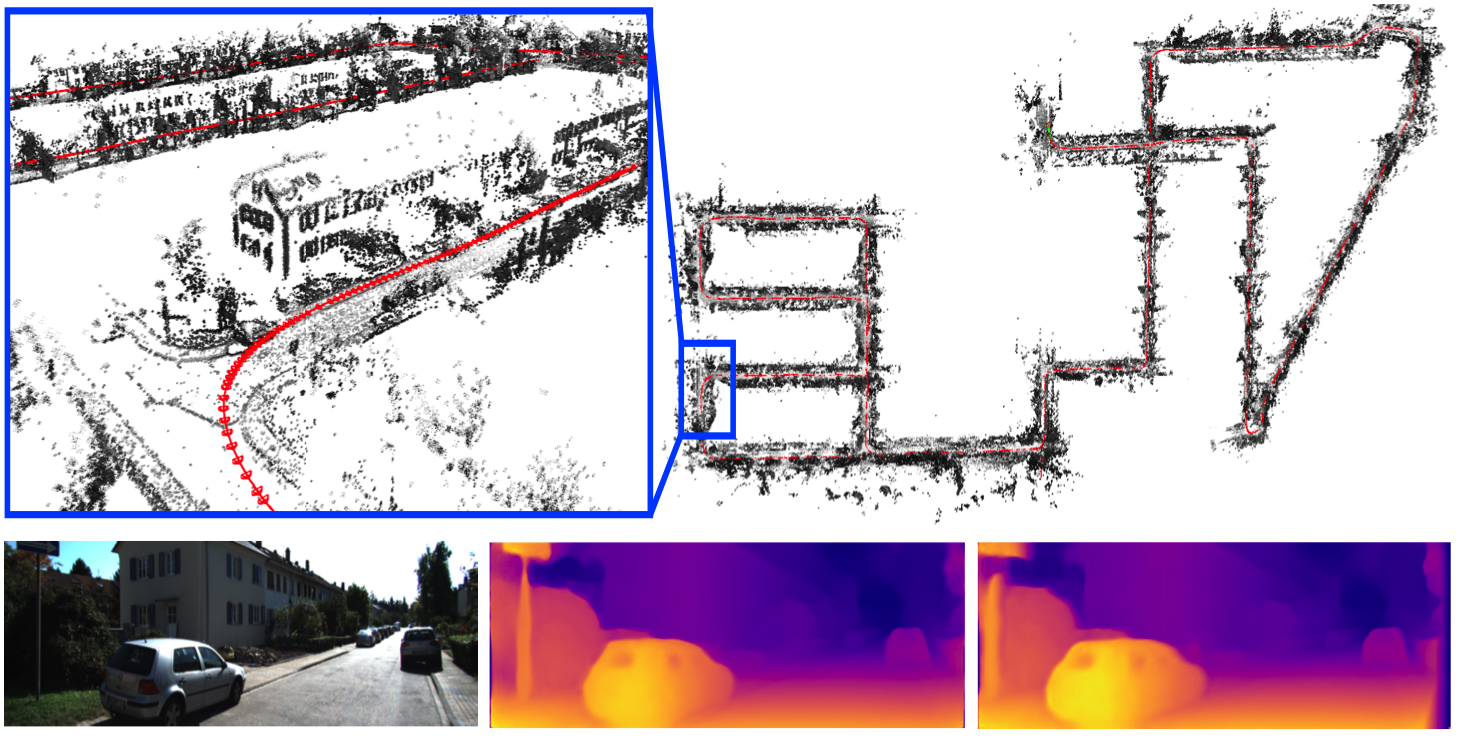

- DVSO: Deep Virtual Stereo Odometry In this project we design a novel deep network and train it in a semi-supervised way to predict depth map from single image, and integrate the depth map into DSO as virtual stereo measurement. Being a monocular VO approach, DVSO achieves comparable performance to the state-of-the-art stereo methods. (Project Page)

- D3VO: Deep Depth, Pose and Uncertainty for Monocular Visual Odometry. In addition to integrate the depth maps generated by a network into DSO, like was done in DVSO, in this work we further learn to predict camera poses and uncertainty maps and fuse them into DSO. For details please visit the Project Page.

Camera Calibration

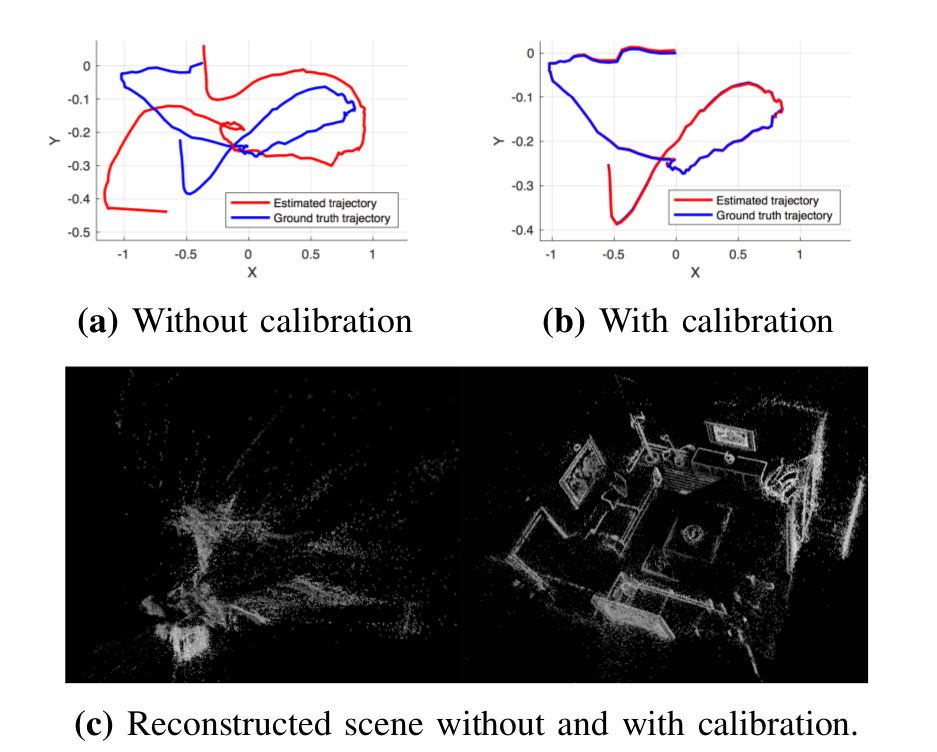

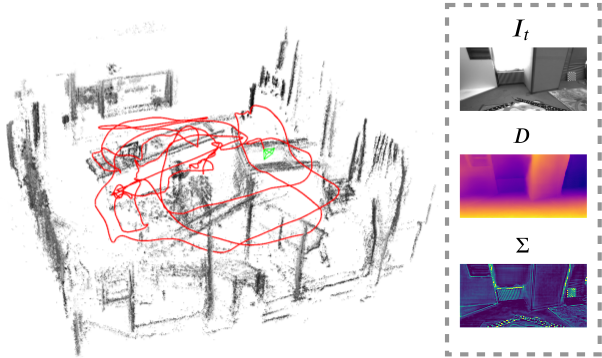

- Online Photometric Calibration We've conducted a project to achieve online photometric calibration, where the exposure times of consecutive frames, the camera response function, and the camera vignetting factors can be recovered in real-time. Experiments show that our estimations converge to the ground truth after only a few seconds. Our approach can be used either offline for calibrating existing datasets, or online in combination with state-of-the-art direct visual odometry or SLAM pipelines. For more details please check our paper "Online Photometric Calibration of Auto Exposure Video for Realtime Visual Odometry and SLAM". (Project Page)

Teaching

- Summer Semester 2016 Computer Vision II: Multiple View Geometry (IN2228)

- Summer Semester 2017 Computer Vision II: Multiple View Geometry (IN2228) Best Elective Lecture Award

- Winter Semester 2017/18 Robotic 3D Vision

Service

- Conference reviewer: CVPR, ICCV, ECCV, ICRA, IROS, AAAI

- Journal reviewer: RA-L, T-RO, AURO, TMM, P&RS

- Organizer: ECCV 2020 Workshop on Map-based Localization for Autonomous Driving

Publications

Export as PDF, XML, TEX or BIB

Journal Articles

2018

[]

Online Photometric Calibration of Auto Exposure Video for Realtime Visual Odometry and SLAM , In IEEE Robotics and Automation Letters (RA-L), volume 3, 2018. (This paper was also selected by ICRA'18 for presentation at the conference.[arxiv][video][code][project])

ICRA'18 Best Vision Paper Award - Finalist []

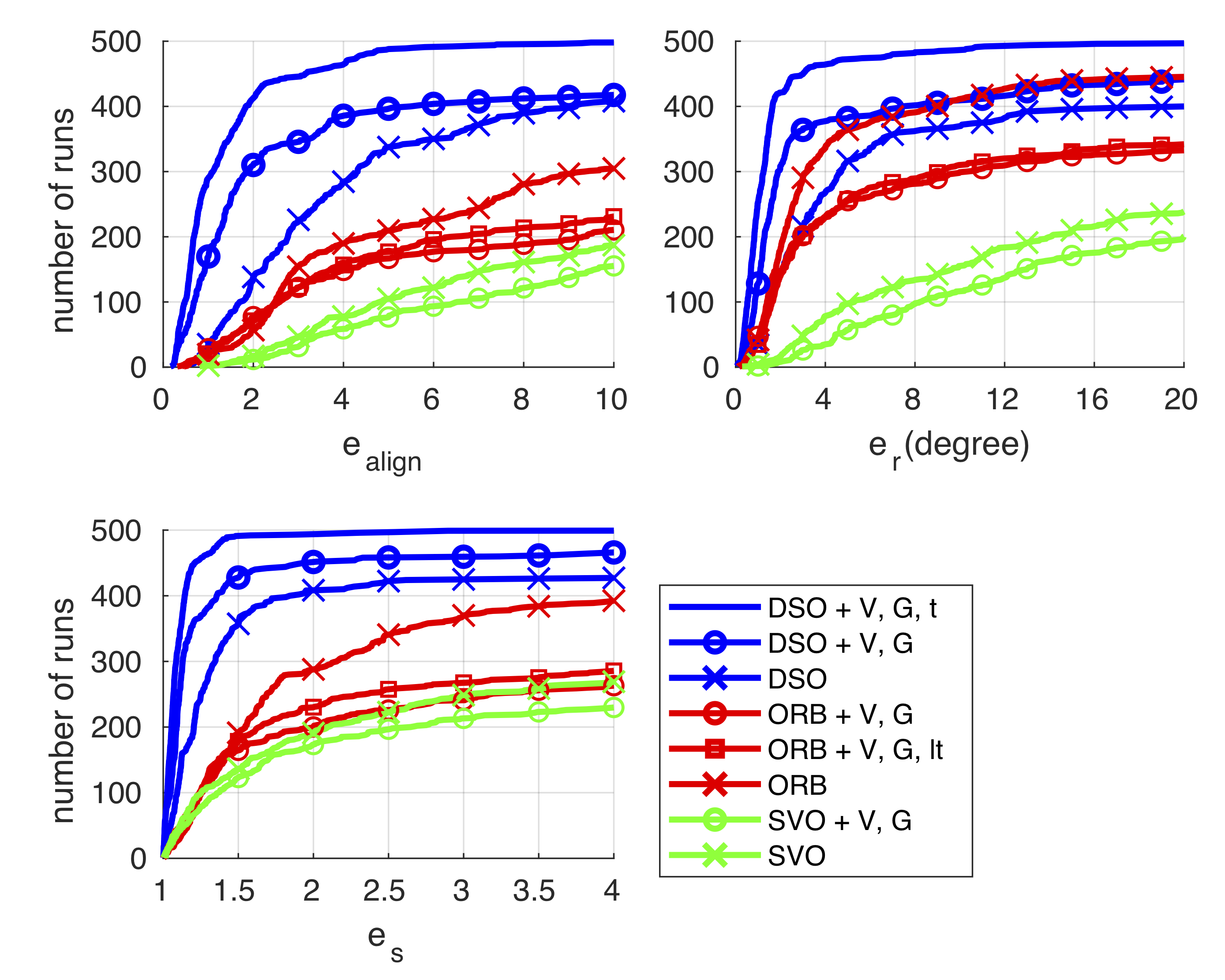

Challenges in Monocular Visual Odometry: Photometric Calibration, Motion Bias and Rolling Shutter Effect , In In IEEE Robotics and Automation Letters (RA-L) & Int. Conference on Intelligent Robots and Systems (IROS), volume 3, 2018. ([arxiv])

Preprints

2022

[]

4Seasons: Benchmarking Visual SLAM and Long-Term Localization for Autonomous Driving in Challenging Conditions , In arXiv preprint arXiv:2301.01147, 2022.

Conference and Workshop Papers

2024

[]

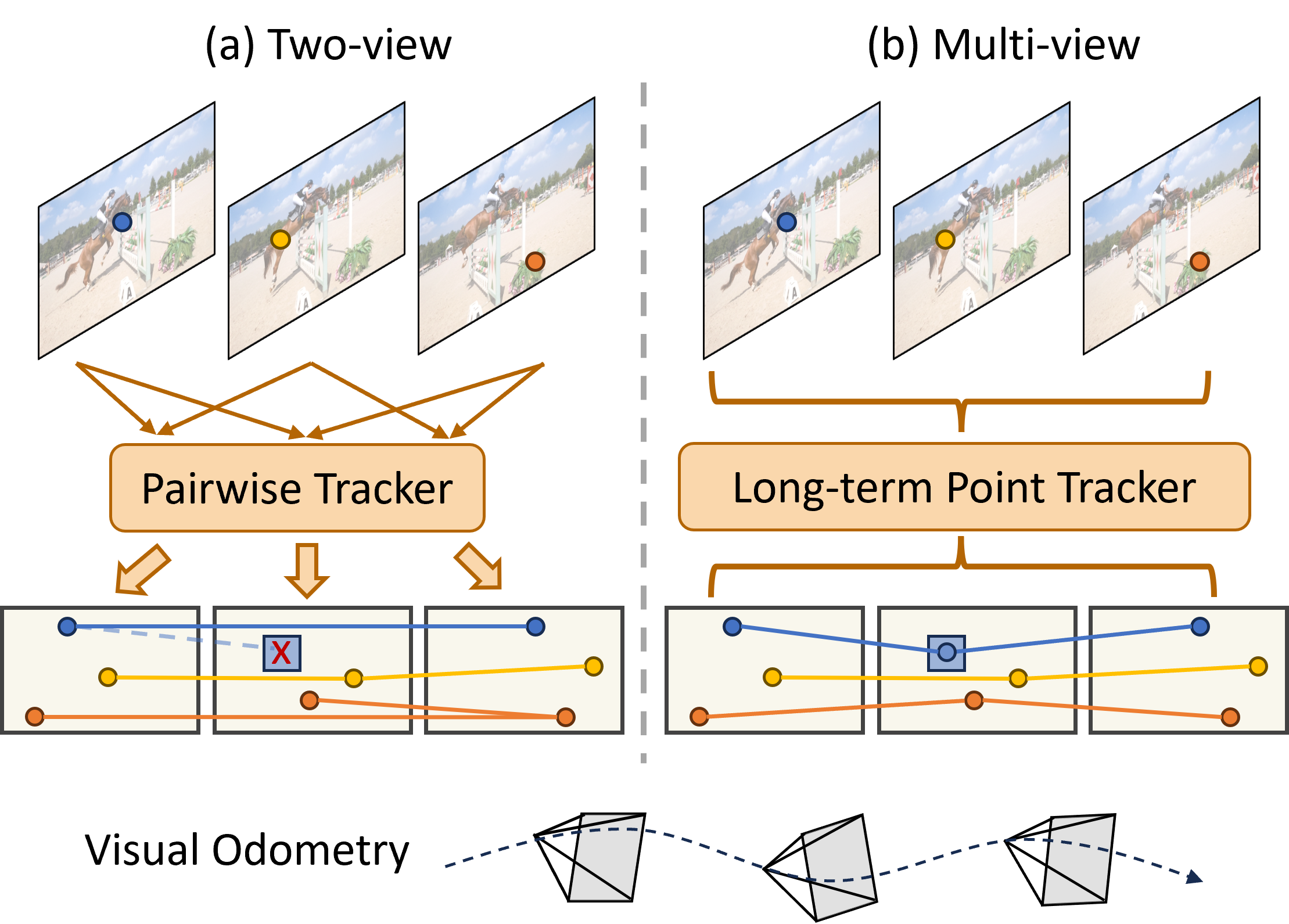

LEAP-VO: Long-term Effective Any Point Tracking for Visual Odometry , In , 2024.

2023

[]

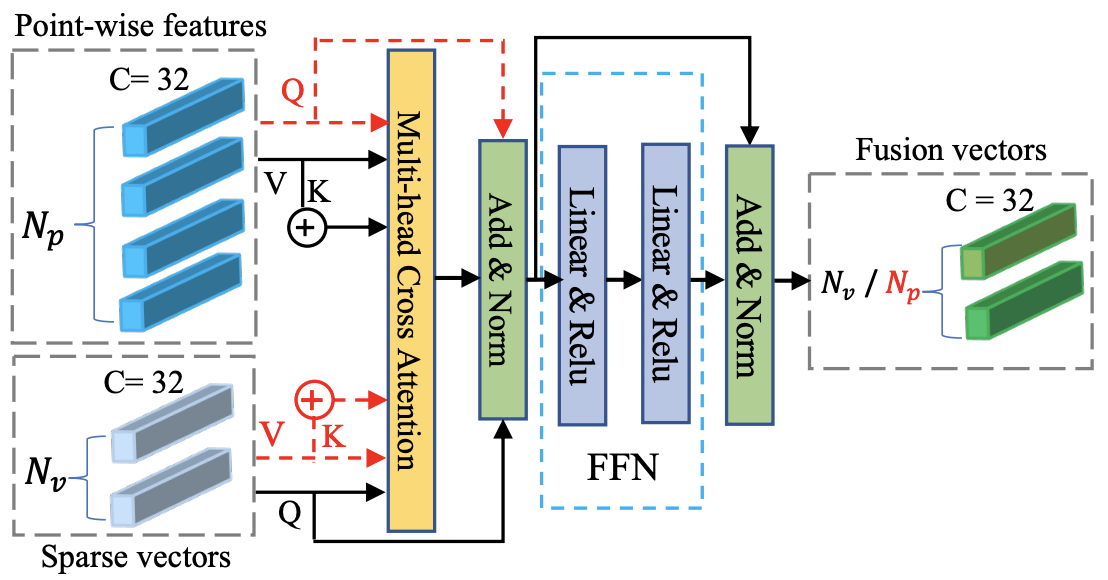

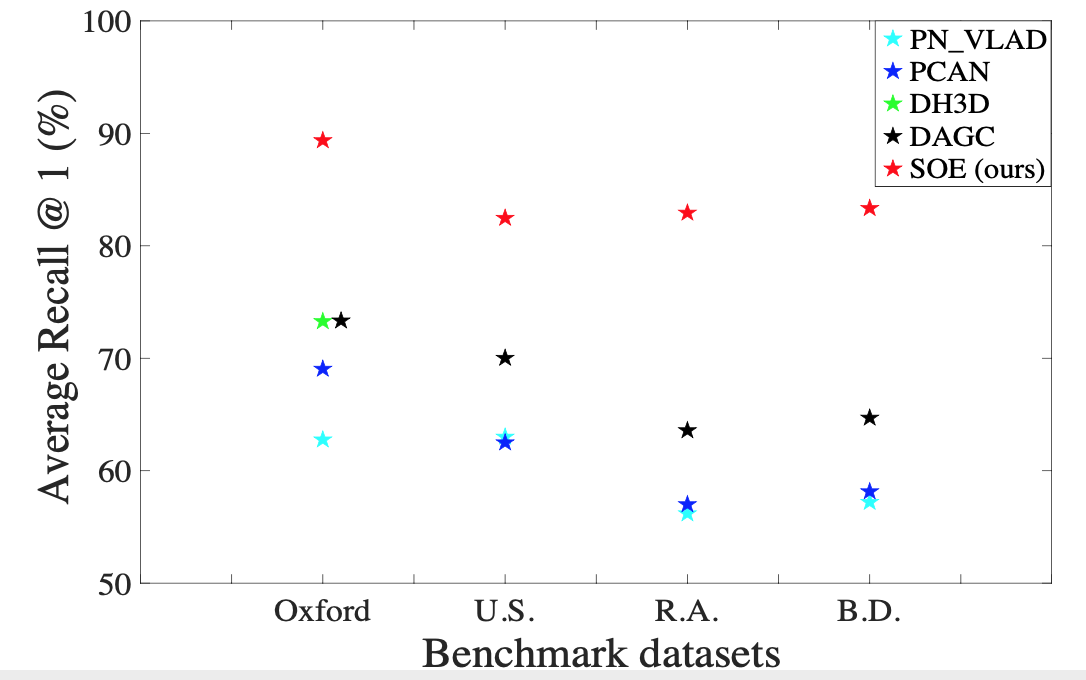

CASSPR: Cross Attention Single Scan Place Recognition , In IEEE International Conference on Computer Vision (ICCV), 2023. ([code])

2021

[]

SOE-Net: A Self-Attention and Orientation Encoding Network for Point Cloud based Place Recognition , In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021. ([arxiv])

Oral Presentation []

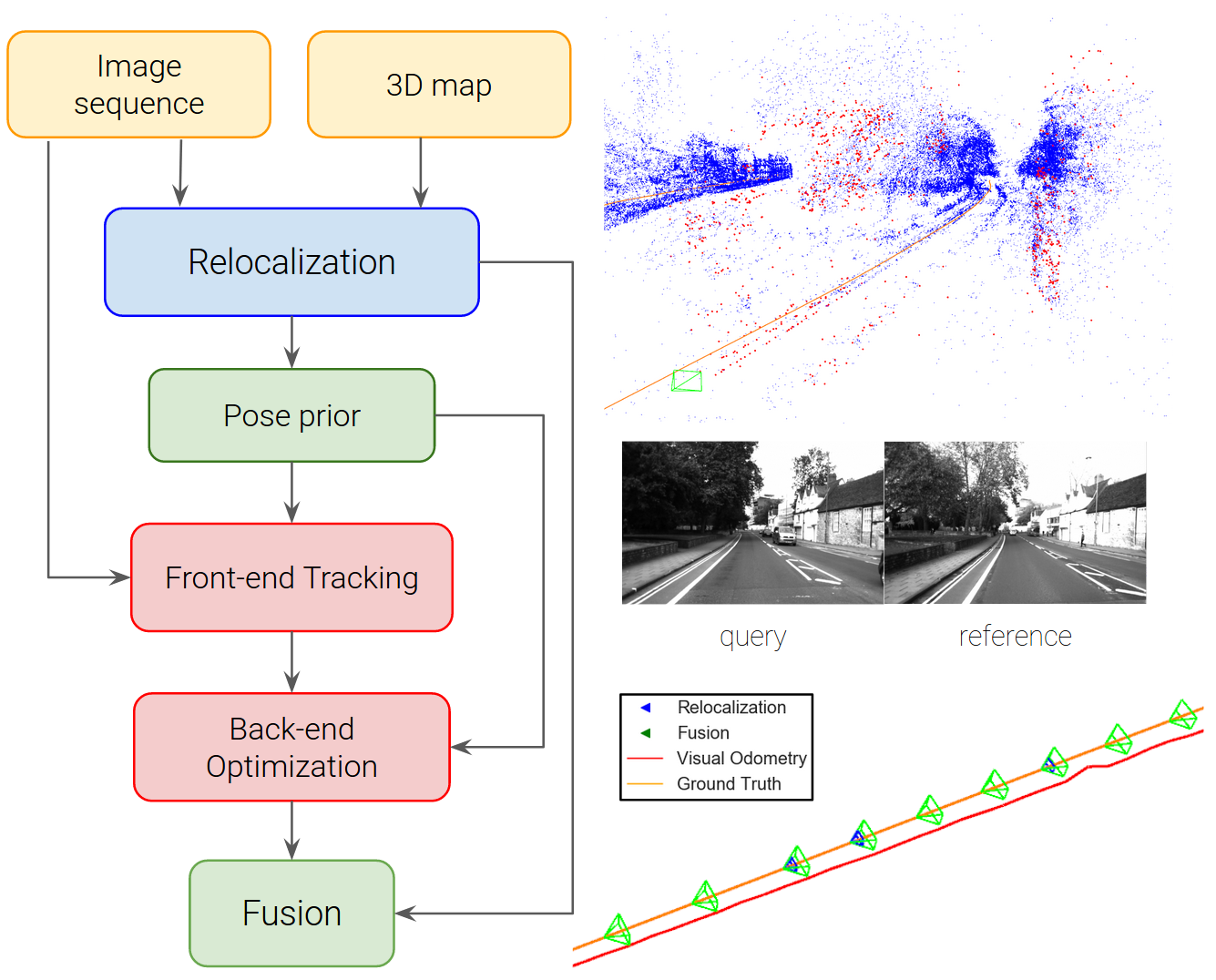

Tight Integration of Feature-based Relocalization in Monocular Direct Visual Odometry , In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 2021. ([project page])

2020

[]

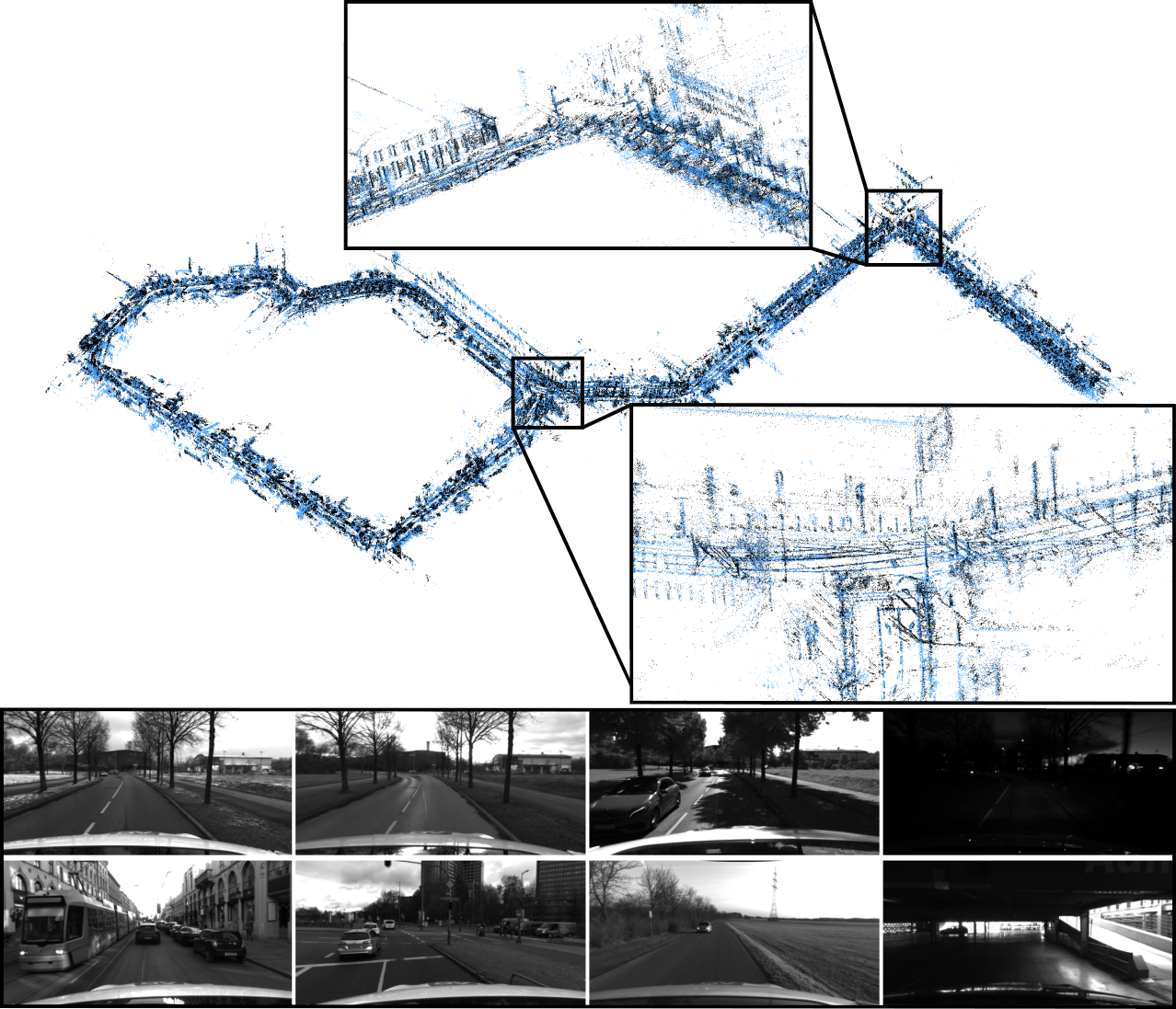

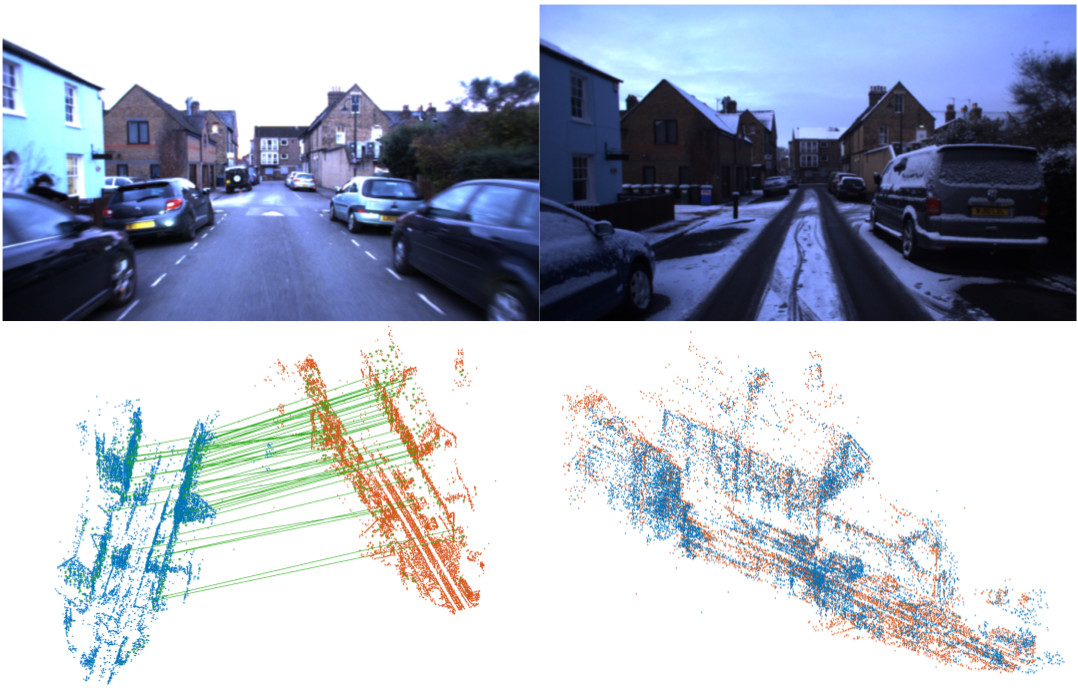

4Seasons: A Cross-Season Dataset for Multi-Weather SLAM in Autonomous Driving , In Proceedings of the German Conference on Pattern Recognition (GCPR), 2020. ([project page][arXiv][video])

[]

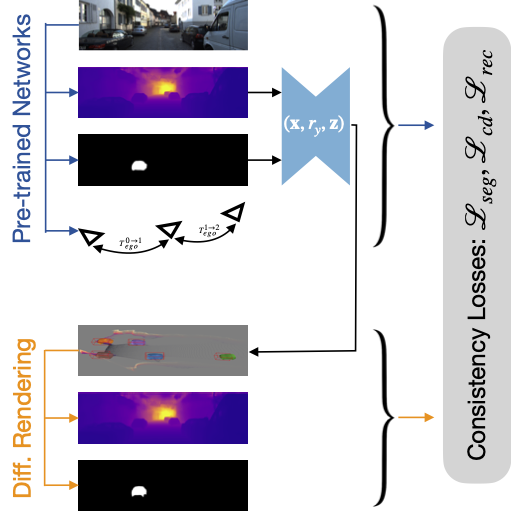

Learning Monocular 3D Vehicle Detection without 3D Bounding Box Labels , In Proceedings of the German Conference on Pattern Recognition (GCPR), 2020. ([project page][video])

[]

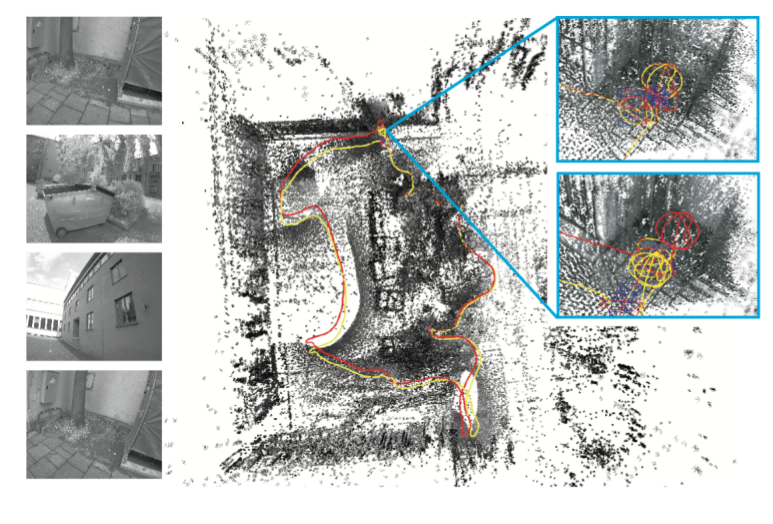

DH3D: Deep Hierarchical 3D Descriptors for Robust Large-Scale 6DoF Relocalization , In European Conference on Computer Vision (ECCV), 2020. ([project page][code][supplementary][arxiv])

Spotlight Presentation []

D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry , In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

Oral Presentation []

DirectShape: Photometric Alignment of Shape Priors for Visual Vehicle Pose and Shape Estimation , In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 2020. ([video][presentation][project page][supplementary][arxiv])

2018

[]

Deep Virtual Stereo Odometry: Leveraging Deep Depth Prediction for Monocular Direct Sparse Odometry , In European Conference on Computer Vision (ECCV), 2018. ([arxiv],[supplementary],[project])

Oral Presentation []

LDSO: Direct Sparse Odometry with Loop Closure , In International Conference on Intelligent Robots and Systems (IROS), 2018. ([arxiv][video][code][project])

2017

[]

Stereo DSO: Large-Scale Direct Sparse Visual Odometry with Stereo Cameras , In International Conference on Computer Vision (ICCV), 2017. ([supplementary][video][arxiv][project])