This is an old revision of the document!

Visual-Inertial Dataset

Contact : David Schubert, Nikolaus Demmel, Vladyslav Usenko.

Visual odometry and SLAM methods have a large variety of applications in domains such as augmented reality or robotics. Complementing vision sensors with inertial measurements tremendously improves tracking accuracy and robustness, and thus has spawned large interest in the development of visual-inertial (VI) odometry approaches. In this paper, we propose the TUM VI benchmark, a novel dataset with a diverse set of sequences in different scenes for evaluating VI odometry. It provides camera images with 1024x1024 resolution at 20Hz, high dynamic range and photometric calibration. An IMU measures accelerations and angular velocities on 3 axes at 200Hz, while the cameras and IMU sensors are time-synchronized in hardware. For trajectory evaluation, we also provide accurate pose ground truth from a motion capture system at high frequency (120Hz) at the start and end of the sequences which we accurately aligned with the camera and IMU measurements. The full dataset with raw and calibrated data will be made publicly available. We also evaluate state-of-the-art VI odometry approaches on our dataset.

Dataset Sequences

We provide several types of sequences for evaluating the Visual-Intertial odometry algorithms:

- room : Sequences in the room with Motion Capture system, where ground truth poses are available for the entire trajectory.

- corridor : Sequences in the corridor and several offices where ground truth poses are available for the start and end segments.

- magistrale : Sequences in the large hall. Ground truth poses are available for the start and end segments.

- outdoors : Outdoor sequences. Ground truth poses are available for the start and end segments.

- slides : Specially changeling sequences which contain sliding down in a closed tube with very bad illumination inside. Ground truth poses are available for the start and end segments.

All sequences have been processed to have:

- consistent timestamps for camera, IMU, and ground truth poses.

- proper IMU scaling and axis alignment.

- ground truth poses in the IMU frame.

| Sequence name | Download processed dataset 1024x1024 | Download processed dataset 521x512 | Download raw dataset | Video | Video 5x |

| corridor1 | bag (21.54GB) |

bag (5.40GB) |

bag (21.54GB) |

play | play (5x) |

| corridor4 | bag (22.80GB) |

bag (5.72GB) |

bag (22.80GB) |

play | play (5x) |

| corridor5 | bag (23.93GB) |

bag (6.00GB) |

bag (23.93GB) |

play | play (5x) |

| magistrale2 | bag (16.96GB) |

bag (4.25GB) |

bag (16.96GB) |

play | play (5x) |

| magistrale3 | bag (31.88GB) |

bag (7.99GB) |

bag (31.88GB) |

play | play (5x) |

| outdoors3 | bag (59.48GB) |

bag (14.91GB) |

bag (59.48GB) |

play | play (5x) |

| outdoors4 | bag (56.13GB) |

bag (14.07GB) |

bag (56.13GB) |

play | play (5x) |

| room1 | bag (10.33GB) |

bag (2.59GB) |

bag (10.33GB) |

play | play (5x) |

| room2 | bag (9.19GB) |

bag (2.31GB) |

bag (9.19GB) |

play | play (5x) |

| room3 | bag (12.21GB) |

bag (3.06GB) |

bag (12.21GB) |

play | play (5x) |

| slides1 | bag (18.53GB) |

bag (4.65GB) |

bag (18.53GB) |

play | play (5x) |

| slides2 | bag (17.10GB) |

bag (4.29GB) |

bag (17.10GB) |

play | play (5x) |

Calibration Sequences

We provide several types of calibration sequences:

- cam-calib : for camera calibration, i.e. for the intrinsic camera parameters and the relative pose between two cameras. The grid of AprilTags has been recorded at low frame rate with changing viewpoints.

- imu-calib : calibration sequence to find the relative pose between cameras and imu. Includes rapid motions in front of the April grid exciting all 6 degrees of freedom. A smaller exposure has been chosen to avoid motion blur.

- vignette-calib : for vignette calibration. Features motion in front of a white wall with a calibration tag in the middle.

- imu-static : IMU data only, to estimate noise and random walk parameters of the IMU. The setup is standing still for 21 hours.

| Sequence name | Download processed dataset 1024x1024 | Download processed dataset 521x512 | Download raw dataset | Video | Video 5x |

| calib-cam1 | bag (0.74GB) |

bag (0.19GB) |

bag (0.74GB) |

play | play (5x) |

| calib-cam2 | bag (0.52GB) |

bag (0.13GB) |

bag (0.52GB) |

play | play (5x) |

| calib-cam3 | bag (1.34GB) |

bag (0.34GB) |

bag (1.34GB) |

play | play (5x) |

| calib-cam4 | bag (1.25GB) |

bag (0.32GB) |

bag (1.25GB) |

play | play (5x) |

| calib-cam5 | bag (0.94GB) |

bag (0.24GB) |

bag (0.94GB) |

play | play (5x) |

| calib-cam6 | bag (0.92GB) |

bag (0.23GB) |

bag (0.92GB) |

play | play (5x) |

| calib-imu1 | bag (5.41GB) |

bag (1.36GB) |

bag (5.41GB) |

play | play (5x) |

| calib-imu2 | bag (3.90GB) |

bag (0.98GB) |

bag (3.90GB) |

play | play (5x) |

| calib-imu3 | bag (2.87GB) |

bag (0.72GB) |

bag (2.87GB) |

play | play (5x) |

| calib-imu4 | bag (3.78GB) |

bag (0.95GB) |

bag (3.78GB) |

play | play (5x) |

| calib-imu5 | bag (3.33GB) |

bag (0.83GB) |

bag (3.33GB) |

play | play (5x) |

| calib-imu6 | bag (3.59GB) |

bag (0.90GB) |

bag (3.59GB) |

play | play (5x) |

| calib-vignette1 | bag (0.49GB) |

bag (0.12GB) |

bag (0.49GB) |

play | play (5x) |

| calib-vignette2 | bag (0.75GB) |

bag (0.19GB) |

bag (0.75GB) |

play | play (5x) |

| calib-vignette3 | bag (0.67GB) |

bag (0.17GB) |

bag (0.67GB) |

play | play (5x) |

Hardware Description

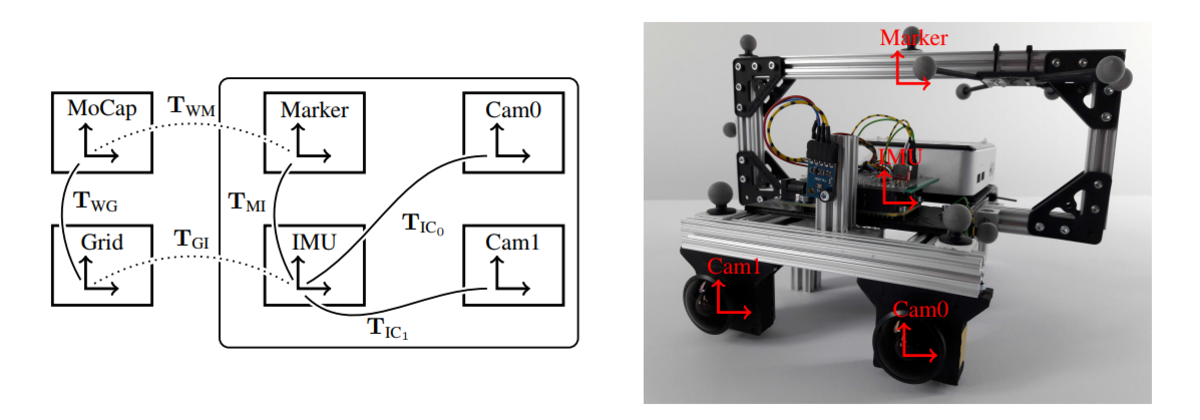

Our sensor setup consists of two monochrome cameras in a stereo setup and an IMU. The IMU is rigidly connected to the two cameras and several IR-reflective markers which allow for pose tracking of the sensor setup by the motion capture (MoCap) system. For calibrating the camera intrinsics and the extrinsics of the sensor setup, we use a grid of AprilTags which has a fixed pose in the MoCap reference (world) system.

Geometric calibration

To perform geometric calibration the dataset contains several calibration sequences (calib-cam). They contain slow motions and well exposed calibration pattern (AprilGrid) such that camera intrinsics and extrinsics can be estimated. As an example, we provide calibration files generated with kalibr for omidirectional and pinhole camera models.

Photometric calibration

All images in the dataset have 16-bit intensity depth and linear response function. Vignetting calibration using the method from TUM Monocular dataset is provided here. We also include vignette calibration datasets (calib-vignette). This makes it possible to repeat the calibration procedure or use alternative tools for vignetting estimation.

IMU Noise Density

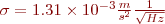

The numerical values of noise densities  can be found at an integration time of

can be found at an integration time of  on the straight line with slope

on the straight line with slope  , while bias parameters

, while bias parameters  are identified as the value on the straight line with slope

are identified as the value on the straight line with slope  at an integration time of

at an integration time of  . This results in

. This results in  ,

,  for the accelerometer and

for the accelerometer and  ,

,  for the gyroscope.

for the gyroscope.

Evaluation Results

Raw Data

Time Alignment and Hand-Eye Calibration

To estimate time offsets and transformation between marker and IMU frame we formulate a least squares problem that jointly estimates those variables.